Wednesday, 21 March 2018

Clustering Vert.x with Infinispan

Welcome to the third in a multi-part series of blog posts about creating Eclipse Vert.x applications with Infinispan. In the previous posts we saw how to create REST and PUSH APIs using the Infinispan Server. This tutorial shows how to create clustered Vert.x applications using Infinispan in embedded mode.

Why Infinispan?

Infinispan serves several use cases, and one of them is acting as the underlying framework to cluster your applications. Infinispan uses peer-to-peer communication between nodes, so the architecture is not based on a master/slave model and there is no single point of failure. Infinispan supports replication and resilience across data centers, and it is fast and reliable. The features that make this datagrid a great product also make it a great cluster manager. If you need to create clustered applications or microservices with Vert.x, you can do so using the Vert.x-Infinispan cluster manager.

Creating a clustered application

The code of this tutorial is available here.

Dummy Application

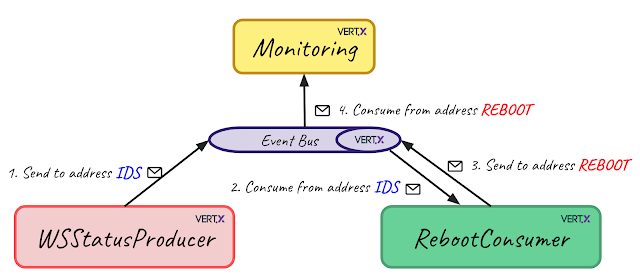

Let’s start with a dummy clustered system with 3 verticles.

WebService Status Producer

Produces a random value from [0, 1, 2] every 1000 milliseconds and sends it to the "ids" address on the event bus.

Reboot Consumer

Consumes messages from the "ids" address on the event bus, and launches a "reboot" that lasts for 3000 milliseconds whenever the value is 0. If a reboot is already in progress, no new reboot is launched. When a reboot starts or ends, a message is sent to the "reboot" address on the event bus.

Notice that:

- We use a simple boolean to check if there is a reboot going on. This is safe because every verticle is executed from a single event loop thread, so there won’t be multiple threads executing the code at the same time.
- An ID is generated to identify the verticle. The message sent to the event bus is a JsonObject.
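The decision logic of the Reboot Consumer can be sketched without any Vert.x dependency. The block below is only a plain-Java model, not the tutorial's verticle: `RebootGuard`, `onStatus` and `onRebootFinished` are hypothetical names, and the list of strings stands in for the JsonObject messages sent to the "reboot" address. In a real verticle, `onStatus` would be called from the "ids" consumer and `onRebootFinished` from a 3000 ms timer.

```java
import java.util.ArrayList;
import java.util.List;

/** Plain-Java model of the Reboot Consumer's decision logic (no Vert.x dependency). */
final class RebootGuard {
    // A plain field is enough in a verticle: its handlers run on one event loop thread.
    private boolean rebooting = false;
    // Stand-in for the messages the verticle would send to the "reboot" address.
    private final List<String> published = new ArrayList<>();

    /** Handles a value received on "ids"; returns true if a reboot was started. */
    boolean onStatus(int value) {
        if (value != 0 || rebooting) {
            return false; // nothing to do, or a reboot is already in progress
        }
        rebooting = true;
        published.add("reboot started");
        return true;
    }

    /** Called when the reboot timer fires. */
    void onRebootFinished() {
        if (rebooting) {
            rebooting = false;
            published.add("reboot ended");
        }
    }

    List<String> published() {
        return published;
    }

    public static void main(String[] args) {
        RebootGuard guard = new RebootGuard();
        int[] statuses = {1, 0, 0, 2};
        for (int status : statuses) {
            System.out.println(status + " -> started=" + guard.onStatus(status));
        }
        guard.onRebootFinished();
        System.out.println(guard.published());
    }
}
```

The second 0 in the sample input is ignored because the first reboot has not finished yet, which is the behaviour described above.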

Clustering the dummy Application

To create a cluster of these applications, we just need to do 2 things:

- Add the cluster manager Maven dependency.
- Run and deploy each verticle in cluster mode. Each verticle class has a main method that deploys that verticle separately. Example for the Monitoring verticle.

[Figure: Running the application, we can monitor the logs]

Each clustered application contains - or embeds - an Infinispan instance. Under the hood, the 3 Infinispan instances will form a cluster.

What if I need to scale?

Imagine you need to scale the Reboot Consumer application. We can run it multiple times, let’s say 2 more times. The two new instances will join the cluster. In this case, we have “ID-93EB”, “ID-45B8” and “ID-247A”, so now we have a cluster of 5. It’s very simple, but if we have a look at the monitoring console, we will notice that reboots are now happening in parallel.

[Figure: 3 Reboot Consumers]

As I mentioned before, this example is a dummy application. But in real life you might need to trigger a process from a verticle that runs as multiple instances, and be sure that this process runs only once at a time. How can we fix this? We can use the Vert.x Shared Data API.

Shared data API to the rescue

In this particular case, we are going to use a clustered lock. Using the lock, we can now synchronise the reboots among the 3 nodes.
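The acquire, reboot, release pattern can be illustrated with a single-JVM model. This is a sketch under loud assumptions: `SingleRebootDemo` and `Consumer` are hypothetical names, and a `java.util.concurrent.Semaphore` with one permit stands in for the cluster-wide lock. In the clustered application the lock would instead be obtained asynchronously from the Vert.x Shared Data API (`vertx.sharedData().getLock(...)`) and released with `Lock.release()`.

```java
import java.util.concurrent.Semaphore;

/** Single-JVM model of "one reboot at a time" across several Reboot Consumers. */
final class SingleRebootDemo {

    /** One Reboot Consumer instance. */
    static final class Consumer {
        private final String id;
        // Assumption: local substitute for the clustered lock shared by all consumers.
        private final Semaphore lock;
        private boolean rebooting = false;

        Consumer(String id, Semaphore lock) {
            this.id = id;
            this.lock = lock;
        }

        /** Tries to start a reboot; succeeds only if no consumer currently holds the lock. */
        boolean tryStartReboot() {
            if (rebooting || !lock.tryAcquire()) {
                return false;
            }
            rebooting = true;
            return true;
        }

        /** Ends the reboot and releases the lock so another consumer can proceed. */
        void finishReboot() {
            if (rebooting) {
                rebooting = false;
                lock.release();
            }
        }

        String id() {
            return id;
        }
    }

    public static void main(String[] args) {
        Semaphore lock = new Semaphore(1); // one permit: one reboot at a time
        Consumer first = new Consumer("ID-93EB", lock);
        Consumer second = new Consumer("ID-45B8", lock);
        System.out.println(first.id() + " starts: " + first.tryStartReboot());
        System.out.println(second.id() + " starts: " + second.tryStartReboot());
        first.finishReboot();
        System.out.println(second.id() + " starts: " + second.tryStartReboot());
    }
}
```

The second consumer is refused while the first one is rebooting and succeeds once the lock has been released. A semaphore is used here rather than a `ReentrantLock` because it can be released from any thread, which is closer to how a lock handed to an asynchronous callback behaves.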

[Figure: Using Shared Data API, one reboot at a time]

The Vert.x clustered lock in this example uses an emulated version of Infinispan’s new Clustered Lock API, which was introduced in the freshly released 9.2. I will come back to this API in particular in future blog posts. You can read about it in the documentation or run the infinispan-simple-tutorial.

One node at a time

When clustering applications with Vert.x, there is something you need to take care of. It is important to understand that each node contains an instance of the datagrid, which means that scaling up and down needs to be done one node at a time. Infinispan, like other datagrids, reshuffles data when a node joins or leaves the cluster. This is done following a distributed hashing algorithm, so not all the data is moved around: only the data that is supposed to live on the new node, or the data owned by a leaving node. If we just kill a bunch of nodes without taking care of the cluster, the consequences can be harmful! This is quite obvious when dealing with databases: we don’t kill a bunch of database instances without taking care of each instance in turn. Even when Infinispan data is only in memory, we need to take care of it in the same way. OpenShift, which is built on top of Kubernetes, helps deal properly and safely with these scale up and down operations.
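To see why only part of the data moves when a node joins or leaves, here is a small sketch of a consistent-hash ring. It is a textbook simplification, not Infinispan’s actual implementation, and every name in it (`HashRing`, `addNode`, `removeNode`, `ownerOf`, the `node-N` and `key-N` strings) is made up for illustration.

```java
import java.util.Map;
import java.util.TreeMap;

/** Simplified consistent-hash ring; an illustration, not Infinispan's algorithm. */
final class HashRing {
    private static final int VIRTUAL_NODES = 64; // ring points per node, smooths the distribution
    private final TreeMap<Integer, String> ring = new TreeMap<>();

    /** Mixes String.hashCode() so that similar strings spread over the ring. */
    private static int hash(String s) {
        int h = s.hashCode();
        h ^= h >>> 16;
        h *= 0x85ebca6b;
        h ^= h >>> 13;
        h *= 0xc2b2ae35;
        h ^= h >>> 16;
        return h;
    }

    /** Places the node's points on the ring. */
    void addNode(String node) {
        for (int i = 0; i < VIRTUAL_NODES; i++) {
            ring.put(hash(node + "#" + i), node);
        }
    }

    /** Removes every ring point that belongs to the node. */
    void removeNode(String node) {
        ring.values().removeIf(node::equals);
    }

    /** The owner is the first ring point at or after the key's hash, wrapping around. */
    String ownerOf(String key) {
        Map.Entry<Integer, String> entry = ring.ceilingEntry(hash(key));
        return (entry != null ? entry : ring.firstEntry()).getValue();
    }

    public static void main(String[] args) {
        HashRing ring = new HashRing();
        ring.addNode("node-1");
        ring.addNode("node-2");
        ring.addNode("node-3");

        String[] before = new String[1000];
        for (int i = 0; i < before.length; i++) {
            before[i] = ring.ownerOf("key-" + i);
        }

        ring.addNode("node-4"); // a new node joins

        int moved = 0;
        for (int i = 0; i < before.length; i++) {
            if (!before[i].equals(ring.ownerOf("key-" + i))) {
                moved++; // every moved key now lives on node-4
            }
        }
        System.out.println(moved + " of " + before.length + " keys moved to the new node");
    }
}
```

In this model a key changes owner only when the joining node takes it over, and when a node leaves, only the keys it owned are reassigned. Keys held by the other nodes stay where they are, which is the property the paragraph above relies on.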

Conclusions

As you have seen, creating clustered applications with Vert.x and Infinispan is very straightforward. The clustered event bus is very powerful. In this example we have seen how to use a clustered lock, but other shared data structures, such as counters, are available.

About the Vert.x Infinispan Cluster Manager status

At the time of this writing, Infinispan 9.2.0.Final has been released. From the vert.x-infinispan cluster manager’s point of view, before Vert.x 3.6 (which is not out yet) the cluster manager uses Infinispan 9.1.6.Final, together with an emulation layer for locks and counters. In this tutorial we are using Vert.x 3.5.1.

This tutorial will be updated to use Infinispan 9.2 as soon as the next vert.x-infinispan is released, which will happen in a few months. Meanwhile, stay tuned!

Tags: vert.x